Model Tuner Library Instructions

This notebook provides a guide on how to install and use the model_tuner library in a notebook environment like Google Colab.

Model Tuner Description

The model_tuner library is designed to streamline the process of hyperparameter tuning and model optimization for machine learning algorithms. It provides an easy-to-use interface for defining, tuning, and evaluating models.

Documentation

For detailed documentation and advanced usage of the model_tuner library, please refer to the model_tuner documentation.

By following these steps, you should be able to install and use the model_tuner library effectively in your notebook environment. If you encounter any issues or have further questions, feel free to reach out for support.

Installation

To install the model_tuner library, use the following command:

! pip install model_tuner

! pip install seaborn

Importing the Library

After installation, you can import the necessary components from the model_tuner library as shown below:

from model_tuner import Model

import seaborn as sns

from sklearn.ensemble import RandomForestClassifier

from sklearn.impute import SimpleImputer

Binary Classification with the titanic dataset and Pipeline

titanic = sns.load_dataset('titanic')

titanic.head()

X = titanic[[col for col in titanic.columns if col != "survived"]]

### Removing repeated data ('alive', 'class' and 'embarked' duplicate 'survived', 'pclass' and 'embark_town')

X = X.drop(columns=['alive', 'class', 'embarked'])

y = titanic['survived']

rf = RandomForestClassifier(class_weight="balanced")

estimator_name = "rf"

rf_pipeline_hyperparams_grid = {

f"{estimator_name}__max_depth": [3, 5, 10, None],

f"{estimator_name}__n_estimators": [10, 100, 200],

f"{estimator_name}__max_features": [1, 3, 5, 7],

f"{estimator_name}__min_samples_leaf": [1, 2, 3],

}
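The grid keys above follow scikit-learn's `<step name>__<parameter>` convention for addressing a parameter of a named pipeline step. The sketch below illustrates that convention on a small hand-built pipeline. That model_tuner places the estimator in a step named after estimator_name is an assumption suggested by the grid keys, not something the source confirms, and `demo_pipeline` is a hypothetical name used only for illustration.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import Pipeline

estimator_name = "rf"

# Hand-built pipeline for illustration only; the step name matches estimator_name.
demo_pipeline = Pipeline(steps=[(estimator_name, RandomForestClassifier())])

# "<step>__<param>" routes the value to that step's parameter.
demo_pipeline.set_params(**{f"{estimator_name}__max_depth": 5})

print(demo_pipeline.named_steps[estimator_name].max_depth)
```

A search over the grid sets parameters through the same mechanism, which is why every key carries the step-name prefix.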

Defining pipeline steps

Here we look at the columns of the data and work out which columns need which kind of preprocessing. For example, we may want to scale the continuous input data. The ordinal data needs converting to ordered integers, e.g. A -> 0, B -> 1, C -> 2, or the other way around. The other categorical data needs one-hot encoding.
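As a small illustration of the ordinal conversion described above, the sketch below encodes a toy column with scikit-learn's OrdinalEncoder. The toy values and variable names are assumptions for illustration. Passing the category order explicitly controls which direction the integers run.

```python
import numpy as np
from sklearn.preprocessing import OrdinalEncoder

# Toy ordinal column (values chosen for illustration).
toy_deck = np.array([["B"], ["A"], ["C"], ["A"]], dtype=object)

# An explicit category list fixes the order: A -> 0, B -> 1, C -> 2.
encoder = OrdinalEncoder(categories=[["A", "B", "C"]])
encoded = encoder.fit_transform(toy_deck)

# Reversing the list gives the opposite direction: C -> 0, B -> 1, A -> 2.
reversed_encoder = OrdinalEncoder(categories=[["C", "B", "A"]])
reversed_encoded = reversed_encoder.fit_transform(toy_deck)

print(encoded.ravel())
print(reversed_encoded.ravel())
```

Without an explicit list, OrdinalEncoder assigns integers by sorted category order, which is what the default OrdinalEncoder() in this notebook does.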

Doing this inside the pipeline means every preprocessing step is fit on the training data only, which ensures there is no data leakage.

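This also allows us to handle missing or unseen data at prediction time. In the categorical transformer below, missing values are first filled with the constant "missing", which becomes its own category. Setting handle_unknown to "ignore" in the OneHotEncoder means a category that was not seen during fitting is encoded as all zeros instead of raising an error.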
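To make the handle_unknown behaviour concrete, the sketch below fits a OneHotEncoder on a few toy values and then transforms a category it has not seen. The town names and variable names are illustrative assumptions only.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

train_towns = np.array([["Southampton"], ["Cherbourg"], ["Queenstown"]], dtype=object)
unseen_town = np.array([["Belfast"]], dtype=object)  # not present during fit

encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(train_towns)

# One output column per category seen during fit, in sorted order.
print(encoder.categories_[0])

# The unseen category is encoded as a row of zeros; no extra column appears.
unseen_encoded = encoder.transform(unseen_town).toarray()
print(unseen_encoded)
```

With the default handle_unknown="error", the same transform call would raise an error instead.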

Setting impute to True handles missing data by automatically imputing it with the mean. This step can be removed and a custom imputer supplied through pipeline_steps instead, as in the code below, where each transformer includes its own SimpleImputer.
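The following sketch shows mean imputation followed by scaling inside a scikit-learn Pipeline, using toy numbers chosen for illustration. It is plain scikit-learn and does not show model_tuner's own imputation option. The point is that the imputation mean and the scaling range are learned from the training rows and then reused on new rows.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler

# Toy numeric feature with missing values (illustrative numbers only).
X_train_toy = np.array([[10.0], [20.0], [np.nan], [30.0]])
X_test_toy = np.array([[np.nan], [40.0]])

numeric_demo = Pipeline(
    steps=[
        ("imputer", SimpleImputer(strategy="mean")),
        ("scaler", MinMaxScaler()),
    ]
)

# The mean, minimum and maximum are learned from the training rows only.
numeric_demo.fit(X_train_toy)
train_mean = numeric_demo.named_steps["imputer"].statistics_[0]

# New rows are transformed with the training statistics, so nothing leaks.
X_test_out = numeric_demo.transform(X_test_toy)
print(train_mean, X_test_out.ravel())
```

The missing test value is filled with the training mean, and the value 40 scales above 1 because it lies outside the training range.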

X.head()

from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import MinMaxScaler

from sklearn.impute import SimpleImputer

from sklearn.pipeline import Pipeline

# Define columns

ohcols = [

"embark_town",

"who",

"sex",

"adult_male"

]

ordcols = [

"deck"

]

scalercols = [

"parch",

"fare",

"age",

"pclass"

]

# Create the pipeline for categorical features

categorical_transformer = Pipeline(

steps=[

("imputer", SimpleImputer(strategy="constant", fill_value="missing")),

("onehot", OneHotEncoder(handle_unknown="ignore")),

]

)

# Create the pipeline for ordinal features

ordinal_transformer = Pipeline(

steps=[

("imputer", SimpleImputer(strategy="most_frequent")),

("ordinal", OrdinalEncoder())

]

)

# Create the pipeline for numeric features (imputation followed by scaling)

numeric_transformer = Pipeline(

steps=[

("imputer", SimpleImputer(strategy="mean")),

("scaler", MinMaxScaler())

]

)

# Define the ColumnTransformer

ct = ColumnTransformer(

transformers=[

("OneHotEncoder", categorical_transformer, ohcols),

("OrdinalEncoder", ordinal_transformer, ordcols),

("Numeric", numeric_transformer, scalercols),

],

remainder='passthrough' # Keep other columns unchanged

)

# Initialize titanic_model

titanic_model_rf = Model(

name="RandomForest_Titanic",

estimator_name=estimator_name,

calibrate=True,

model_type="classification",

estimator=rf,

kfold=False,

pipeline_steps=[("Preprocessor", ct)],

stratify_y=True,

grid=rf_pipeline_hyperparams_grid,

randomized_grid=True,

n_iter=5,

scoring=["roc_auc"],

random_state=42,

n_jobs=-1,

)
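With randomized_grid=True and n_iter=5, only a handful of the possible hyperparameter combinations are tried. The sketch below uses scikit-learn's ParameterGrid and ParameterSampler to show the size of the full grid and a random draw of five candidates. Whether model_tuner uses these utilities internally is an assumption; the sketch only illustrates the idea of randomized search.

```python
from sklearn.model_selection import ParameterGrid, ParameterSampler

estimator_name = "rf"
grid = {
    f"{estimator_name}__max_depth": [3, 5, 10, None],
    f"{estimator_name}__n_estimators": [10, 100, 200],
    f"{estimator_name}__max_features": [1, 3, 5, 7],
    f"{estimator_name}__min_samples_leaf": [1, 2, 3],
}

# The exhaustive grid has 4 * 3 * 4 * 3 combinations.
n_total = len(ParameterGrid(grid))

# A randomized search evaluates only n_iter of them.
sampled = list(ParameterSampler(grid, n_iter=5, random_state=42))

print(n_total, len(sampled))
for candidate in sampled:
    print(candidate)
```

Raising n_iter explores more of the grid at the cost of more model fits.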

titanic_model_rf.grid_search_param_tuning(X, y, f1_beta_tune=True)

X_train, y_train = titanic_model_rf.get_train_data(X, y)

X_valid, y_valid = titanic_model_rf.get_valid_data(X, y)

X_test, y_test = titanic_model_rf.get_test_data(X, y)
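The three calls above return the training, validation and test partitions. As a rough illustration of what a stratified three-way split looks like, the sketch below uses scikit-learn's train_test_split twice on toy data. The 60/20/20 proportions and all variable names are assumptions, since the source does not state model_tuner's split sizes.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
X_toy = rng.normal(size=(100, 3))
y_toy = np.array([0] * 60 + [1] * 40)

# Hold out 20% for testing, keeping the class balance (stratify).
X_rest, X_test_toy, y_rest, y_test_toy = train_test_split(
    X_toy, y_toy, test_size=0.2, stratify=y_toy, random_state=42
)

# Split the remainder 75/25, which gives 60% train and 20% validation overall.
X_train_toy, X_valid_toy, y_train_toy, y_valid_toy = train_test_split(
    X_rest, y_rest, test_size=0.25, stratify=y_rest, random_state=42
)

print(len(X_train_toy), len(X_valid_toy), len(X_test_toy))
print(y_train_toy.mean(), y_valid_toy.mean(), y_test_toy.mean())
```

Stratifying keeps the share of positive labels the same in every partition, which matters for an imbalanced target.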

titanic_model_rf.fit(X_train, y_train)

prob_uncalibrated = titanic_model_rf.predict_proba(X_test)[:, 1]

if titanic_model_rf.calibrate:

    titanic_model_rf.calibrateModel(X, y)

metrics = titanic_model_rf.return_metrics(X_test, y_test)

titanic_model_rf.threshold

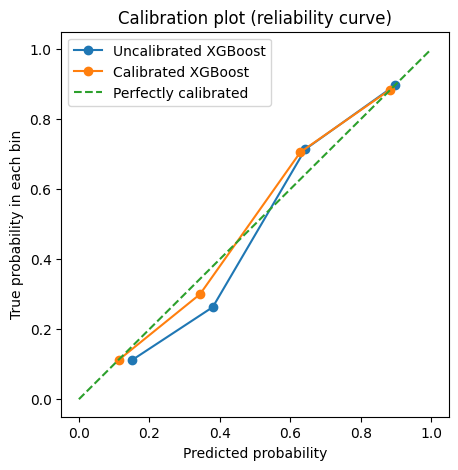
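The threshold attribute above holds a tuned decision threshold. The source does not describe how f1_beta_tune=True selects it, so the sketch below only illustrates the general idea: score a set of candidate thresholds with an F-beta score on held-out data and keep the best one. The toy labels, probabilities, beta value and candidate grid are all assumptions.

```python
import numpy as np
from sklearn.metrics import fbeta_score

# Toy validation labels and predicted probabilities (illustrative values).
y_valid_toy = np.array([0, 0, 0, 1, 0, 1, 1, 1])
p_valid_toy = np.array([0.05, 0.22, 0.35, 0.42, 0.55, 0.63, 0.81, 0.95])

beta = 1.0  # hypothetical choice; beta > 1 weights recall more heavily
candidate_thresholds = np.linspace(0.1, 0.9, 9)

# Score each threshold by turning probabilities into hard predictions.
scores = [
    fbeta_score(y_valid_toy, (p_valid_toy >= t).astype(int), beta=beta)
    for t in candidate_thresholds
]

best_threshold = float(candidate_thresholds[int(np.argmax(scores))])
print(best_threshold, max(scores))
```

On this toy data the default 0.5 cut-off is not the best choice, which is the motivation for tuning the threshold instead of fixing it.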

Calibrating Model

from matplotlib import pyplot as plt

from sklearn.calibration import calibration_curve

## Get the predicted probabilities for the test data from the calibrated model

y_prob_calibrated = titanic_model_rf.predict_proba(X_test)[:, 1]

## Compute the calibration curve for the calibrated model

prob_true_calibrated, prob_pred_calibrated = calibration_curve(

y_test,

y_prob_calibrated,

n_bins=4,

)

prob_true_uncalibrated, prob_pred_uncalibrated = calibration_curve(

y_test,

prob_uncalibrated,

n_bins=4,

)

## Plot the calibration curves

plt.figure(figsize=(5, 5))

plt.plot(

prob_pred_uncalibrated,

prob_true_uncalibrated,

marker="o",

label="Uncalibrated Random Forest",

)

plt.plot(

prob_pred_calibrated,

prob_true_calibrated,

marker="o",

label="Calibrated Random Forest",

)

plt.plot([0, 1], [0, 1], linestyle="--", label="Perfectly calibrated")

plt.xlabel("Predicted probability")

plt.ylabel("True probability in each bin")

plt.title("Calibration plot (reliability curve)")

plt.legend()

plt.show()
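To clarify what calibration_curve returns in the plot above, the sketch below runs it on a tiny hand-made example with n_bins=4. The labels and probabilities are toy values chosen so that each bin can be checked by hand.

```python
import numpy as np
from sklearn.calibration import calibration_curve

y_true_toy = np.array([0, 0, 1, 1, 1, 0, 1, 1])
y_prob_toy = np.array([0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9])

# With n_bins=4 and the default uniform strategy, the bins are
# [0, 0.25), [0.25, 0.5), [0.5, 0.75) and [0.75, 1].
prob_true_toy, prob_pred_toy = calibration_curve(y_true_toy, y_prob_toy, n_bins=4)

# prob_pred is the mean predicted probability in each bin;
# prob_true is the fraction of positive labels in that bin.
for mean_pred, frac_pos in zip(prob_pred_toy, prob_true_toy):
    print(f"mean predicted = {mean_pred:.2f}, observed positive rate = {frac_pos:.2f}")
```

A well-calibrated model has points close to the diagonal, where the mean predicted probability matches the observed positive rate.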

KFold?

If we want to use KFold cross-validation, we can simply set the kfold parameter to True. The data is then split into folds automatically.
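As a rough picture of what fold-wise evaluation involves, the sketch below runs 10-fold cross-validation with plain scikit-learn on synthetic data and averages the per-fold ROC AUC. It does not reproduce model_tuner's internals. The dataset, the small forest and the variable names are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_val_score

# Synthetic binary classification data (not the titanic dataset).
X_toy, y_toy = make_classification(n_samples=200, n_features=8, random_state=42)

# Ten folds: each sample is used for validation exactly once.
cv = KFold(n_splits=10, shuffle=True, random_state=42)

fold_scores = cross_val_score(
    RandomForestClassifier(n_estimators=20, random_state=42),
    X_toy,
    y_toy,
    cv=cv,
    scoring="roc_auc",
)

# One score per fold, summarised by the mean.
mean_score = fold_scores.mean()
print(fold_scores.round(3), round(mean_score, 3))
```

Because every fold serves as validation data once, no separate validation split is needed.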

## Initialize titanic_model

titanic_model_kf = Model(

name="RandomForest_Titanic",

estimator_name=estimator_name,

calibrate=True,

model_type="classification",

estimator=rf,

kfold=True,

pipeline_steps=[("ColumnTransformer", ct)],

stratify_y=False,

n_splits=10,

grid=rf_pipeline_hyperparams_grid,

randomized_grid=True,

n_iter=5,

scoring=["roc_auc"],

random_state=42,

n_jobs=-1,

)

titanic_model_kf.grid_search_param_tuning(X, y, f1_beta_tune=True)

#### When using KFold, X and y are passed as a whole to the fit method as they

#### are split within this into the separate folds.

#### The metrics are assessed over each fold and averaged.

titanic_model_kf.fit(X, y)

titanic_model_kf.threshold

titanic_model_kf.return_metrics(X, y)