Calibration Curves and Area Under the Curve

Understanding the mathematical intuition behind calibration curves and related metrics helps clarify their diagnostic value in evaluating model reliability. This section outlines foundational concepts using simplified examples, progressing toward their real-world interpretation in model evaluation.

Calibration Curves and Area Interpretation

Calibration curves visualize how well predicted probabilities align with actual outcomes. A perfectly calibrated model lies along the diagonal line, where predicted probability equals observed frequency.
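As a rough illustration of how the points on such a curve can be obtained, the sketch below groups predictions into equal-width bins and compares the mean predicted probability with the observed frequency in each bin. The helper name `binned_calibration_curve`, the equal-width binning, and the toy data are assumptions made for this example, not part of any particular library.

```python
def binned_calibration_curve(y_true, y_prob, n_bins=5):
    """Return (mean predicted probability, observed frequency) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, y_prob):
        # Equal-width bins on [0, 1]; p == 1.0 is placed in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((y, p))

    curve = []
    for members in bins:
        if members:  # skip empty bins
            mean_pred = sum(p for _, p in members) / len(members)
            obs_freq = sum(y for y, _ in members) / len(members)
            curve.append((mean_pred, obs_freq))
    return curve


# Hypothetical toy data: binary outcomes and predicted probabilities.
y_true = [0, 0, 0, 1, 0, 1, 1, 1]
y_prob = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]

for mean_pred, obs_freq in binned_calibration_curve(y_true, y_prob, n_bins=2):
    print(f"predicted {mean_pred:.2f} -> observed {obs_freq:.2f}")
```

In this toy data, each bin's observed frequency matches its mean predicted probability, so both points fall on the diagonal.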

Below are two manual examples using toy functions to illustrate the concept of area under the calibration curve, a key component of metrics like Calibration AUC.

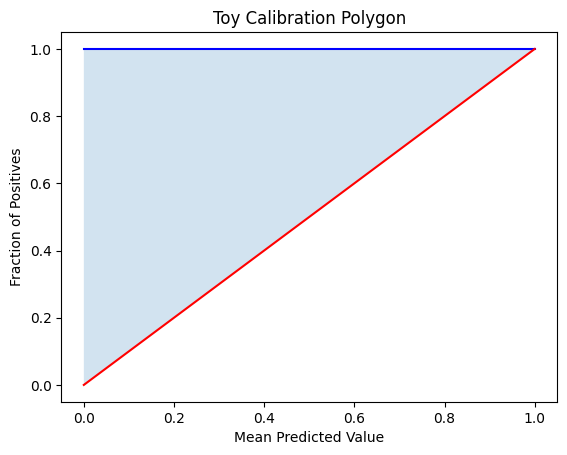

Example 1: Calibration with y = x²

This function simulates underconfident predictions, where the model consistently underestimates risk.

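To compute the calibration area under this curve from \(x = 0\) to \(x = 1\):

Solution:

\[\int_0^1 x^2 \, dx = \left[ \frac{x^3}{3} \right]_0^1 = \frac{1}{3}\]

The area under the ideal calibration line (diagonal) is:

\[\int_0^1 x \, dx = \left[ \frac{x^2}{2} \right]_0^1 = \frac{1}{2}\]

So, the polygonal calibration AUC becomes:

\[\left| \frac{1}{3} - \frac{1}{2} \right| = \frac{1}{6}\]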

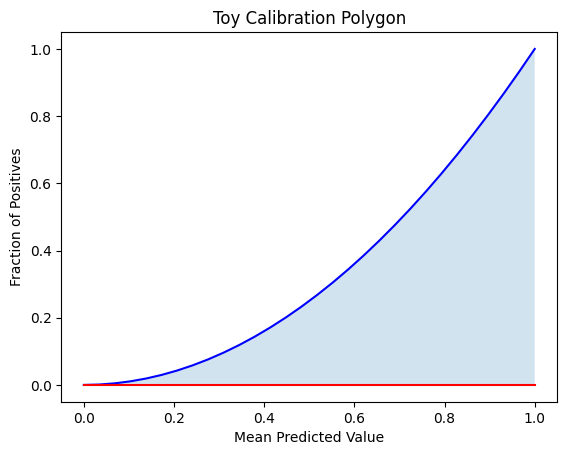
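The areas in Example 1 can also be checked numerically. The sketch below uses a plain trapezoidal rule; the helper `trapezoid_area` and the choice of 10,000 sub-intervals are assumptions made for illustration.

```python
def trapezoid_area(f, a=0.0, b=1.0, n=10_000):
    """Approximate the integral of f over [a, b] with the trapezoidal rule."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


curve_area = trapezoid_area(lambda x: x ** 2)  # close to 1/3
diagonal_area = trapezoid_area(lambda x: x)    # close to 1/2
gap = abs(curve_area - diagonal_area)          # close to 1/6

print(round(curve_area, 4), round(diagonal_area, 4), round(gap, 4))
```

The same helper works for any curve supplied as a Python function, which is convenient when no closed-form antiderivative is available.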

Example 2: Calibration with y = x² + 4x

This toy example models overconfident predictions, where the model consistently overshoots risk.

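To calculate the area under the curve from \(x = 0\) to \(x = 1\), we compute the definite integral:

\[\int_0^1 \left( x^2 + 4x \right) dx\]

Solution:

We split the integral into two separate parts:

\[\int_0^1 \left( x^2 + 4x \right) dx = \int_0^1 x^2 \, dx + \int_0^1 4x \, dx\]

First Integral:

\[\int_0^1 x^2 \, dx = \left[ \frac{x^3}{3} \right]_0^1 = \frac{1}{3}\]

Second Integral:

\[\int_0^1 4x \, dx = \left[ 2x^2 \right]_0^1 = 2\]

Final Answer:

\[\frac{1}{3} + 2 = \frac{7}{3}\]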

This result represents the total area under the curve \(y = x^2 + 4x\) over the interval \([0, 1]\). If comparing against the ideal calibration line \(y = x\), you would subtract the diagonal area \(\frac{1}{2}\) to isolate the calibration polygon AUC.
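For polynomial toy curves like this one, the integral can be evaluated exactly with the power rule. The following sketch uses Python's `fractions` module to avoid rounding; the helper `integrate_polynomial` and its power-to-coefficient dictionary are hypothetical conventions chosen for this example.

```python
from fractions import Fraction


def integrate_polynomial(coeffs, a, b):
    """Exactly integrate sum(c * x**k) over [a, b].

    `coeffs` maps each integer power k to its integer coefficient c.
    """
    a, b = Fraction(a), Fraction(b)
    total = Fraction(0)
    for power, coeff in coeffs.items():
        # Power rule: the antiderivative of c * x^k is c * x^(k + 1) / (k + 1).
        total += Fraction(coeff, power + 1) * (b ** (power + 1) - a ** (power + 1))
    return total


area = integrate_polynomial({2: 1, 1: 4}, 0, 1)  # x^2 + 4x
diagonal = integrate_polynomial({1: 1}, 0, 1)    # y = x

print(area, diagonal, area - diagonal)  # prints: 7/3 1/2 11/6
```

Subtracting the diagonal area from the total gives \(\frac{7}{3} - \frac{1}{2} = \frac{11}{6}\) for this toy curve.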

Note

In real calibration plots, both axes are bounded within [0, 1], so a curve such as \(y = x^2 + 4x\), which reaches 5 at \(x = 1\), could not appear. This example is meant only to illustrate the mechanics of integration over a custom curve.

Regression Residuals

A residual is the difference between an observed value \(y_i\) and the model's prediction \(\hat{y}_i\). Residuals are used to compute various point estimate metrics that summarize model performance on a given dataset. Common examples include:

Mean Absolute Error (MAE):

\[\text{MAE} = \frac{1}{n} \sum_{i=1}^n \left| y_i - \hat{y}_i \right|\]

Mean Squared Error (MSE):

\[\text{MSE} = \frac{1}{n} \sum_{i=1}^n \left( y_i - \hat{y}_i \right)^2\]

Root Mean Squared Error (RMSE):

\[\text{RMSE} = \sqrt{\text{MSE}}\]
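A minimal sketch of these three formulas in plain Python is shown below. The function name `regression_metrics` and the toy values are assumptions made for illustration.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MAE, MSE, and RMSE from paired observations and predictions."""
    residuals = [y - y_hat for y, y_hat in zip(y_true, y_pred)]
    n = len(residuals)
    mae = sum(abs(r) for r in residuals) / n
    mse = sum(r * r for r in residuals) / n
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse)}


# Hypothetical toy data; the residuals are 1, 0, -2, -1.
metrics = regression_metrics([3.0, 5.0, 2.0, 7.0], [2.0, 5.0, 4.0, 8.0])
print(metrics)
```

Here MAE is 1.0 and MSE is 1.5. RMSE is the square root of MSE, which puts it back in the units of the target variable.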

These are considered point estimates because they provide single-value summaries of the model’s residual error without incorporating uncertainty or sampling variability. To assess the stability or confidence of these estimates, techniques such as bootstrapping can be used to generate a distribution of the metric over repeated resamples of the data.
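One way to sketch the bootstrapping idea is a percentile interval for MAE, obtained by resampling the absolute errors with replacement. The helper `bootstrap_mae`, the 1,000 resamples, the 95% level, and the fixed seed are all assumptions chosen for this example; other interval methods exist.

```python
import random


def bootstrap_mae(y_true, y_pred, n_resamples=1000, alpha=0.05, seed=0):
    """Return the MAE point estimate and a percentile bootstrap interval."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    abs_errors = [abs(y - y_hat) for y, y_hat in zip(y_true, y_pred)]
    n = len(abs_errors)

    estimates = []
    for _ in range(n_resamples):
        # Resample n absolute errors with replacement and recompute MAE.
        sample = [abs_errors[rng.randrange(n)] for _ in range(n)]
        estimates.append(sum(sample) / n)

    estimates.sort()
    lower = estimates[int((alpha / 2) * n_resamples)]
    upper = estimates[min(int((1 - alpha / 2) * n_resamples), n_resamples - 1)]
    point = sum(abs_errors) / n
    return point, (lower, upper)


# Hypothetical toy data.
point, (lower, upper) = bootstrap_mae([3.0, 5.0, 2.0, 7.0], [2.0, 5.0, 4.0, 8.0])
print(f"MAE = {point:.3f}, 95% interval = ({lower:.3f}, {upper:.3f})")
```

With only four observations the interval is very wide. On realistic datasets, its width gives a sense of how much the metric could change under resampling.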