iPython Notebooks

Binary Classification Examples

Regression Example

Google Colab Notebook

HTML File

Note

This class is designed to be flexible and can be extended to include additional functionalities or custom metrics.

It is essential to properly configure the parameters during initialization to suit the specific requirements of your machine learning task.

Ensure that all dependencies are installed and properly imported before using the

Modelclass from themodel_tunerlibrary.

Input Parameters

- class Model(name, estimator_name, estimator, model_type, calibrate=False, kfold=False, imbalance_sampler=None, train_size=0.6, validation_size=0.2, test_size=0.2, stratify_y=False, stratify_cols=None, grid=None, scoring=['roc_auc'], n_splits=10, random_state=3, n_jobs=1, display=True, randomized_grid=False, n_iter=100, pipeline_steps=[], boost_early=False, feature_selection=False, class_labels=None, multi_label=False, calibration_method='sigmoid', custom_scorer=[], bayesian=False)

A class for building, tuning, and evaluating machine learning models, supporting both classification and regression tasks, as well as multi-label classification.

- Parameters:

name (str) – A unique name for the model, helpful for tracking outputs and logs.

estimator_name (str) – Prefix for the estimator in the pipeline, used for setting parameters in tuning (e.g., estimator_name +

__param_name).estimator (object) – The machine learning model to be trained and tuned.

model_type (str) – Specifies the type of model, must be either

classificationorregression.calibrate (bool, optional) – Whether to calibrate the model’s probability estimates. Default is

False.kfold (bool, optional) – Whether to perform k-fold cross-validation. Default is

False.imbalance_sampler (object, optional) – An imbalanced data sampler from the imblearn library, e.g.,

RandomUnderSamplerorRandomOverSampler.train_size (float, optional) – Proportion of the data to be used for training. Default is

0.6.validation_size (float, optional) – Proportion of the data to be used for validation. Default is

0.2.test_size (float, optional) – Proportion of the data to be used for testing. Default is

0.2.stratify_y (bool, optional) – Whether to stratify by the target variable during data splitting. Default is

False.stratify_cols (str, list, or pandas.DataFrame, optional) – Columns to use for stratification during data splitting. Can be a single column name (as a string), a list of column names (as strings), or a DataFrame containing the columns for stratification. Default is

None.grid (list of dict) – Hyperparameter grid for model tuning, supporting both regular and Bayesian search.

scoring (list of str) – List of scoring metrics for evaluation, e.g.,

["roc_auc", "accuracy"].n_splits (int, optional) – Number of splits for k-fold cross-validation. Default is

10.random_state (int, optional) – Seed for random number generation to ensure reproducibility. Default is

3.n_jobs (int, optional) – Number of parallel jobs to run for model fitting. Default is

1.display (bool, optional) – Whether to print messages during the tuning and training process. Default is

True.randomized_grid (bool, optional) – Whether to use randomized grid search. Default is

False.n_iter (int, optional) – Number of iterations for randomized grid search. Default is

100.pipeline_steps (list, optional) – List of steps for the pipeline, e.g., preprocessing and feature selection steps. Default is

[].boost_early (bool, optional) – Whether to enable early stopping for boosting algorithms like XGBoost. Default is

False.feature_selection (bool, optional) – Whether to enable feature selection. Default is

False.class_labels (list, optional) – List of labels for multi-class classification. Default is

None.multi_label (bool, optional) – Whether the task is a multi-label classification problem. Default is

False.calibration_method (str, optional) – Method for calibration; options include

sigmoidandisotonic. Default issigmoid.custom_scorer (dict, optional) – Dictionary of custom scoring functions, allowing additional metrics to be evaluated. Default is

[].bayesian (bool, optional) – Whether to perform Bayesian hyperparameter tuning using

BayesSearchCV. Default isFalse.

- Raises:

ImportError – If the

bootstrappermodule is not found or not installed.ValueError – Raised for various issues, such as: - Invalid

model_typevalue. Themodel_typemust be explicitly specified as eitherclassificationorregression. - Invalid hyperparameter configurations or mismatchedXandyshapes.AttributeError – Raised if an expected pipeline step is missing, or if

self.estimatoris improperly initialized.TypeError – Raised when an incorrect parameter type is provided, such as passing

Noneinstead of a valid object.IndexError – Raised for indexing issues, particularly in confusion matrix formatting functions.

KeyError – Raised when accessing dictionary keys that are not available, such as missing scores in

self.best_params_per_score.RuntimeError – Raised for unexpected issues during model fitting or transformations that do not fit into the other exception categories.

Key Methods and Functionalities

__init__(...)

Initializes the model tuner with configurations, including estimator, cross-validation settings, scoring metrics, pipeline steps, feature selection, imbalance sampler, Bayesian search, and model calibration options.

reset_estimator()

- reset_estimator()

Resets the estimator and pipeline configuration.

Description:

This function reinitializes the

estimatorattribute of the class based on the current pipeline configuration.If

pipeline_stepsare defined, it creates a new pipeline usingself.PipelineClassand a deep copy of the steps.If

pipeline_stepsare not defined, it resets theestimatorto a single-step pipeline containing the original estimator.

Behavior:

If

self.pipeline_stepsis not empty:Creates a pipeline using the defined steps.

If

self.pipeline_stepsis empty:Resets the

estimatorto a single-step pipeline with the original estimator.

Attributes Used:

self.pipeline_steps: The steps of the pipeline (if defined).self.PipelineClass: The class used to construct pipelines.self.estimator_name: The name of the primary estimator step.self.original_estimator: The original estimator to be reset.

Output:

The function updates the

self.estimatorattribute and does not return a value.

Notes:

This function is intended for internal use as a helper function to manage pipeline and estimator states.

Ensures that the pipeline or estimator is always in a valid state after modifications or resets.

process_imbalance_sampler()

- process_imbalance_sampler(X_train, y_train)

Processes the imbalance sampler, applying it to resample the training data.

- Parameters:

X_train (

pandas.DataFrameor array-like) – Training features to be resampled.y_train (

pandas.Seriesor array-like) – Training target labels to be resampled.

- Raises:

KeyError – Raised if the

resamplerstep is missing in the pipeline.ValueError – Raised if

X_trainory_trainare incompatible with the pipeline or resampler.

Output:

Prints the class distribution of

y_trainafter resampling.Does not modify the original

X_trainory_train.

Description:

This function applies an imbalance sampler to resample the training data, ensuring the target distribution is balanced.

If preprocessing steps are defined in the pipeline, they are applied to the training features before resampling.

Prints the distribution of

y_trainafter resampling to provide visibility into the balance of classes.

Behavior:

If preprocessing steps exist (

self.pipeline_steps):Applies preprocessing to

X_trainusing the preprocessing pipeline obtained fromget_preprocessing_pipeline().

Clones the

resamplerstep from the pipeline to ensure independent operation.Resamples the training data using the cloned resampler, modifying the distribution of

y_train.

Attributes Used:

self.pipeline_steps: Indicates whether preprocessing steps are defined.self.get_preprocessing_pipeline(): Retrieves the preprocessing pipeline (if available).self.estimator.named_steps["resampler"]: The resampler to apply for balancing the target classes.

Note

The function assumes that the pipeline includes a valid

resamplerstep undernamed_steps.Ensures compatibility with

pandas.DataFrameand array-like structures fory_train.Prints the class distribution of

y_trainafter resampling for user awareness.

calibrateModel()

- calibrateModel(X, y, score=None)

Calibrates the model with cross-validation support and configurable calibration methods, improving probability estimates.

- Parameters:

- Raises:

ValueError – Raised if incompatible parameters (e.g., invalid scoring metric) are passed.

KeyError – Raised if required attributes or parameters are missing.

Description:

Supports model calibration with both k-fold cross-validation and a pre-split train-validation-test workflow.

Uses

CalibratedClassifierCVfor calibration with methods such assigmoidorisotonic(defined byself.calibration_method).Handles cases where imbalance sampling or early stopping is applied during training.

Provides additional support for CPU/GPU device management if applicable.

Behavior:

With K-Fold Cross-Validation:

Resets the estimator to avoid conflicts with pre-calibrated models.

Calibrates the model using k-fold splits with the configured calibration method.

Optionally evaluates calibration using the provided scoring metric(s).

Generates and prints confusion matrices for each fold (if applicable).

Without K-Fold Cross-Validation:

Performs a train-validation-test split using

train_val_test_split.Resets the estimator and applies preprocessing or imbalance sampling if configured.

Fits the model on training data, with or without early stopping.

Calibrates the pre-trained model on the test set and evaluates calibration results.

Attributes Used:

self.kfold: Indicates whether k-fold cross-validation is enabled.self.calibrate: Determines whether calibration is applied.self.calibration_method: Specifies the calibration method (e.g.,sigmoidorisotonic).self.best_params_per_score: Stores the best parameters for each scoring metric.self.n_splits: Number of splits for cross-validation.self.stratify_y,self.stratify_cols: Used for stratified train-validation-test splitting.self.imbalance_sampler: Indicates if an imbalance sampler is applied.self.boost_early: Enables early stopping during training.

Output:

Modifies the class attribute

self.estimatorto include the calibrated model.Generates calibration reports and scoring metrics if applicable.

Prints performance metrics (e.g., scores and confusion matrices) for the calibrated model.

Note

When

scoreis provided, the function evaluates calibration using the specified metric(s).Requires the estimator to be compatible with

CalibratedClassifierCV.Handles both balanced and imbalanced datasets with preprocessing support.

Get train, val, test data

Description:

These functions return subsets of the dataset (features and labels) based on predefined indices stored in the class attributes:

self.X_train_indexandself.y_train_indexfor training data.self.X_valid_indexandself.y_valid_indexfor validation data.self.X_test_indexandself.y_test_indexfor test data.

Designed to work with

pandas.DataFrameandpandas.Seriesobjects.

get_train_data()

- get_train_data(X, y)

Retrieves the training data based on specified indices.

- Parameters:

X (

pandas.DataFrame) – Full dataset containing features.y (

pandas.Series) – Full dataset containing target labels.

- Returns:

A tuple containing the training features and labels.

- Return type:

tuple of (

pandas.DataFrame,pandas.Series)

get_valid_data()

- get_valid_data(X, y)

Retrieves the validation data based on specified indices.

- Parameters:

X (

pandas.DataFrame) – Full dataset containing features.y (

pandas.Series) – Full dataset containing target labels.

- Returns:

A tuple containing the validation features and labels.

- Return type:

tuple of (

pandas.DataFrame,pandas.Series)

get_test_data()

- get_test_data(X, y)

Retrieves the test data based on specified indices.

- Parameters:

X (

pandas.DataFrame) – Full dataset containing features.y (

pandas.Series) – Full dataset containing target labels.

- Returns:

A tuple containing the test features and labels.

- Return type:

tuple of (

pandas.DataFrame,pandas.Series)

Note

These methods assume that the indices (e.g.,

self.X_train_index) are defined and valid.The methods return subsets of the provided

Xandydata by indexing the rows based on the stored indices.Useful for workflows where train, validation, and test splits are dynamically managed or predefined.

calibrate_report()

- calibrate_report(X, y, score=None)

Generates a calibration report, including a confusion matrix and classification report.

- Parameters:

X (

pandas.DataFrameor array-like) – Features dataset for validation.y (

pandas.Seriesor array-like) – True labels for the validation dataset.score (str, optional) – Optional scoring metric name to include in the report. Default is

None.

- Raises:

ValueError – Raised if the provided

Xoryare incompatible with the model or metrics.

Description:

This method evaluates the performance of a calibrated model on the validation dataset.

Generates and prints: - A confusion matrix, with support for multi-label classification if applicable. - A classification report summarizing precision, recall, and F1-score for each class.

Behavior:

Calls the

predictmethod to obtain predictions for the validation dataset.Uses

confusion_matrixormultilabel_confusion_matrixbased on the value ofself.multi_labelto compute the confusion matrix.Prints a labeled confusion matrix using the

_confusion_matrix_print()function.Generates a classification report using

classification_reportfromsklearn.metricsand assigns it to theself.classification_reportattribute.

Output:

Prints the following to the console:

The confusion matrix with labels.

The classification report.

A separator line for readability.

Updates the attribute

self.classification_reportwith the generated classification report.

Note

If the model is multi-label, a confusion matrix is generated for each label.

The optional

scoreparameter can be used to specify and display a scoring metric in the report heading.Designed to work with models that support binary, multi-class, or multi-label predictions.

fit()

- fit(X, y, validation_data=None, score=None)

Fits the model to training data and, if applicable, tunes thresholds and performs early stopping. Allows feature selection and processing steps as part of the pipeline.

- Parameters:

X (

pandas.DataFrameor array-like) – Training features.y (

pandas.Seriesor array-like) – Training target labels.validation_data (tuple of (

pandas.DataFrame,pandas.Series), optional) – Tuple containing validation features and labels. Required for early stopping. Default isNone.score (str, optional) – Optional scoring metric to guide the fitting process. Default is

None.

- Raises:

ValueError – Raised if invalid scoring metrics or parameters are provided.

Description:

This method trains the model with support for both k-fold cross-validation and single train-validation-test workflows.

If feature selection or preprocessing steps are configured, they are applied before fitting.

For certain estimators, early stopping is supported when validation data is provided.

The method dynamically sets model parameters based on tuning results for the specified or default scoring metric.

Behavior:

With K-Fold Cross-Validation:

Resets the estimator and fits the model using k-fold splits.

If a scoring metric is provided, applies it to guide the cross-validation.

Stores cross-validation results in the self.xval_output attribute.

Without K-Fold Cross-Validation:

Resets the estimator and applies feature selection or preprocessing if configured.

Fits the model on training data. If early stopping is enabled, uses validation data to monitor performance and stop training early.

Attributes Used:

self.kfold: Indicates whether k-fold cross-validation is enabled.self.best_params_per_score: Stores tuned parameters for different scoring metrics.self.feature_selection,self.pipeline_steps: Flags for feature selection and preprocessing steps.self.imbalance_sampler: Specifies whether imbalance sampling is applied.self.boost_early: Enables early stopping during training.self.estimator_name: Name of the estimator in the pipeline.

Output:

Updates the class attribute

self.estimatorwith the fitted model.For k-fold cross-validation, stores results in

self.xval_output.

Note

Early stopping requires both validation features and labels.

Feature selection and preprocessing steps are dynamically applied based on the pipeline configuration.

When a custom scoring metric is specified, it must match one of the predefined or user-defined metrics.

return_metrics()

- return_metrics(X, y, optimal_threshold=False, model_metrics=False, print_threshold=False, return_dict=False, print_per_fold=False)

A flexible function to evaluate model performance by generating classification or regression metrics. It provides options to print confusion matrices, classification reports, and regression metrics, and supports optimal threshold display and dictionary outputs.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix for evaluation.y (

pandas.Seriesor array-like) – The target vector for evaluation.optimal_threshold (bool, optional) – Whether to use the optimal threshold for predictions (classification only). Default is

False.model_metrics (bool, optional) – Whether to calculate and print detailed model metrics using

report_model_metrics(). Default isFalse.print_threshold (bool, optional) – Whether to print the optimal threshold used for predictions (classification only). Default is

False.return_dict (bool, optional) – Whether to return the metrics as a dictionary instead of printing them. Default is

False.print_per_fold (bool, optional) – For cross-validation, whether to print metrics for each fold. Default is

False.

- Returns:

A dictionary containing metrics if

return_dict=True; otherwise, the metrics are printed.- Return type:

dict or None

Description:

The

return_metrics()function is designed to be highly adaptable, allowing users to:Print Classification Metrics: Displays a confusion matrix and the accompanying classification report when evaluating a classification model.

Print Regression Metrics: Outputs standard regression metrics (e.g., R², Mean Absolute Error) when evaluating a regression model.

Report Detailed Model Metrics: By setting

model_metrics=True, the function invokesreport_model_metrics()to provide detailed insights into metrics like precision, recall, and AUC-ROC.Display the Optimal Threshold: Setting

print_threshold=Truedisplays the threshold value used for classification predictions, particularly when an optimal threshold has been tuned.Return Results as a Dictionary: If

return_dict=True, the metrics are returned in a structured dictionary, allowing users to programmatically access the results. This is especially useful for further analysis or logging.

Behavior:

Classification Models:

Generates and prints a confusion matrix.

Prints a detailed classification report, including precision, recall, F1-score, and accuracy.

Optionally prints additional model metrics and the optimal threshold.

Regression Models:

Outputs standard regression metrics such as R², Mean Absolute Error, and Root Mean Squared Error.

Cross-Validation:

For k-fold validation, the function aggregates metrics across folds and prints the averaged results. If

print_per_fold=True, metrics for each fold are also printed in addition to the averaged results.

Output:

If

return_dict=True, returns:Classification Models:

A dictionary with:

Classification Report: The classification report as a string.Confusion Matrix: The confusion matrix as an array.Best Features: (Optional) List of the top features if feature selection is enabled.

Regression Models:

A dictionary with:

Regression Report: A dictionary of regression metrics.Best Features: (Optional) List of the top features if feature selection is enabled.

If

return_dict=False, prints the metrics directly to the console.

Examples:

## Example usage for validation metrics: print("Validation Metrics") model.return_metrics( X=X_valid, y=y_valid, optimal_threshold=True, print_threshold=True, model_metrics=True, ) print() ## Example usage for test metrics: print("Test Metrics") model.return_metrics( X=X_test, y=y_test, optimal_threshold=True, print_threshold=True, model_metrics=True, ) print()

Note

This function is suitable for both classification and regression models.

Supports cross-validation workflows by calculating metrics across multiple folds.

Enables users to programmatically access metrics via the dictionary output for custom analysis.

predict()

- predict(X, y=None, optimal_threshold=False)

Makes predictions and predicts probabilities, allowing threshold tuning.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix for prediction.y (

pandas.Seriesor array-like, optional) – The true target labels, required only for k-fold predictions. Default isNone.optimal_threshold (bool, optional) – Whether to use an optimal classification threshold for predictions. Default is

False.

- Returns:

Predicted class labels or predictions adjusted by the optimal threshold.

- Return type:

numpy.ndarrayor array-like- Raises:

ValueError – Raised if invalid inputs or configurations are provided.

Description:

Predicts target values for the input data.

Supports both regression and classification tasks, with specific behavior for each:

For regression: Direct predictions are returned, ignoring thresholds.

For classification: Predictions are adjusted using an optimal threshold when enabled.

If k-fold cross-validation is active, performs predictions for each fold using

cross_val_predict.

Behavior:

With K-Fold Cross-Validation:

Returns predictions based on cross-validated folds.

Without K-Fold Cross-Validation:

Uses the trained model’s

predict()method.Applies the optimal threshold to adjust classification predictions, if specified.

Related Methods:

predict_proba(X, y=None):Predicts probabilities for classification tasks.

Supports k-fold cross-validation using

cross_val_predictwith themethod="predict_proba"option.

Note

Optimal thresholding is useful for fine-tuning classification performance metrics such as F1-score or precision-recall balance.

For classification, the threshold can be tuned for specific scoring metrics (e.g., ROC-AUC).

Works seamlessly with pipelines or directly with the underlying model.

grid_search_param_tuning()

- grid_search_param_tuning(X, y, f1_beta_tune=False, betas=[1, 2])

Performs grid or Bayesian search parameter tuning, optionally tuning F-beta score thresholds for classification.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix for training and validation.y (

pandas.Seriesor array-like) – The target vector corresponding toX.f1_beta_tune (bool, optional) – Whether to tune F-beta score thresholds during parameter search. Default is

False.betas (list of int, optional) – List of beta values to use for F-beta score tuning. Default is

[1, 2].

- Raises:

ValueError – Raised if the provided data or configurations are incompatible with parameter tuning.

KeyError – Raised if required scoring metrics are missing.

Description:

This method tunes hyperparameters for a model using grid search or Bayesian optimization.

Supports tuning F-beta thresholds for classification tasks.

Can handle both k-fold cross-validation and single train-validation-test workflows.

Behavior:

With K-Fold Cross-Validation:

Splits data into k folds using

kfold_splitand performs parameter tuning.Optionally tunes thresholds for F-beta scores on validation splits.

Without K-Fold Cross-Validation:

Performs a train-validation-test split using

train_val_test_split.Applies preprocessing, feature selection, and imbalance sampling if configured.

Tunes parameters and thresholds based on validation scores.

Attributes Used:

self.kfold: Indicates whether k-fold cross-validation is enabled.self.scoring: List of scoring metrics used for evaluation.self.best_params_per_score: Stores the best parameter set for each scoring metric.self.grid: Parameter grid for tuning.self.calibrate: Specifies whether the model calibration is enabled.self.imbalance_sampler: Indicates if imbalance sampling is applied.self.feature_selection: Specifies whether feature selection is applied.self.pipeline_steps: Configuration for preprocessing steps.self.boost_early: Enables early stopping during model training.self.threshold: Stores tuned thresholds for F-beta score optimization.

Output:

Updates the class attribute

self.best_params_per_scorewith the best parameters and scores for each metric.Optionally updates

self.thresholdwith tuned F-beta thresholds.Prints best parameters and scores if

self.displayis enabled.

Note

Threshold tuning requires classification tasks and is not applicable for regression.

Early stopping is supported if

self.boost_earlyis enabled and validation data is provided.Works seamlessly with pipelines for preprocessing and feature selection.

print_selected_best_features()

- print_selected_best_features(X)

Prints and returns the selected top K best features based on the feature selection step.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix used during the feature selection process.- Returns:

A list of the selected features or column indices.

- Return type:

- Raises:

AttributeError – Raised if the feature selection pipeline is not properly configured or trained.

Description:

This method retrieves the top K features selected by the feature selection pipeline.

Prints the names or column indices of the selected features to the console.

Returns the selected features as a list.

Behavior:

For DataFrames:

Prints the names of the selected feature columns.

Returns a list of column names corresponding to the selected features.

For Array-like Data:

Prints the indices of the selected feature columns.

Returns a list of column indices.

Attributes Used:

self.get_feature_selection_pipeline(): Retrieves the feature selection pipeline used for selecting features.

Output:

Prints the selected features or indices to the console.

Returns the selected features as a list.

Note

Assumes that a feature selection pipeline has been configured and trained prior to calling this method.

Designed to work with both

pandas.DataFrameand array-like structures for feature matrices.

tune_threshold_Fbeta()

- tune_threshold_Fbeta(score, y_valid, betas, y_valid_proba, kfold=False)

Tunes classification threshold for optimal F-beta score, balancing precision and recall across various thresholds.

- Parameters:

score (str) – A label or name for the score used to store the best threshold.

y_valid (array-like of shape (n_samples,)) – Ground truth (actual) labels for the validation dataset.

betas (list of float) – A list of beta values to consider when calculating the F-beta score. Beta controls the balance between precision and recall.

y_valid_proba (array-like of shape (n_samples,)) – Predicted probabilities for the positive class in the validation dataset. Used to evaluate thresholds.

kfold (bool, optional) – If

True, returns the best threshold for the given score. If False, updates thethresholdattribute in place. Default isFalse.

- Returns:

The best threshold for the given score if

kfoldisTrue, otherwise returnsNone.- Return type:

float or None

- Raises:

ValueError – Raised if input arrays have mismatched dimensions or invalid beta values.

TypeError – Raised if invalid data types are passed for parameters.

Description:

This method identifies the optimal classification threshold for maximizing the F-beta score.

The F-beta score balances precision and recall, with beta determining the relative weight of recall.

Evaluates thresholds ranging from 0 to 1 (with a step size of 0.01) to find the best threshold for each beta value.

Behavior:

Threshold Evaluation:

For each threshold, computes binary predictions and evaluates the resulting F-beta score.

Penalizes thresholds leading to undesirable outcomes, such as excessive false positives compared to true negatives.

K-Fold Mode:

If

kfold=True, returns the optimal threshold without modifying class attributes.

Non K-Fold Mode:

Updates the

self.thresholdattribute with the optimal threshold for the specified score.

Attributes Used:

self.threshold: Stores the optimal threshold for each scoring metric.self.beta: Stores the beta value corresponding to the maximum F-beta score.

Notes:

The method iterates over thresholds and calculates F-beta scores for each beta value, identifying the best-performing threshold.

Penalizes thresholds where false positives exceed true negatives to ensure practical performance.

Designed to support models evaluated on binary classification tasks.

Example:

optimal_threshold = tune_threshold_Fbeta( score="roc_auc", y_valid=y_valid, betas=[0.5, 1, 2], y_valid_proba=model.predict_proba(X_valid)[:, 1], kfold=False, )

train_val_test_split()

- train_val_test_split(X, y, stratify_y=None, train_size=0.6, validation_size=0.2, test_size=0.2, random_state=3, stratify_cols=None)

Splits data into train, validation, and test sets, supporting stratification by specific columns or the target variable.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix to split.y (

pandas.Seriesor array-like) – The target vector corresponding toX.stratify_y (

pandas.Seriesor None, optional) – Specifies whether to stratify based on the target variable. Default isNone.train_size (float, optional) – Proportion of the data to allocate to the training set. Default is

0.6.validation_size (float, optional) – Proportion of the data to allocate to the validation set. Default is

0.2.test_size (float, optional) – Proportion of the data to allocate to the test set. Default is

0.2.random_state (int, optional) – Random seed for reproducibility. Default is

3.stratify_cols (list,

pandas.DataFrame, or None, optional) – Columns to use for stratification, in addition to or instead ofy. Default isNone.

- Returns:

A tuple containing train, validation, and test sets: (

X_train,X_valid,X_test,y_train,y_valid,y_test).- Return type:

tuple of (

pandas.DataFrame,pandas.Series)- Raises:

ValueError – Raised if the sizes for train, validation, and test do not sum to 1.0 or if invalid stratification keys are provided.

Description:

This function splits data into three sets: train, validation, and test.

Supports stratification based on the target variable (

y) or specific columns (stratify_cols).Ensures the proportions of the split sets are consistent with the specified

train_size,validation_size, andtest_size.

Behavior:

Combines

stratify_colsandy(if both are provided) to create a stratification key.Handles missing values in

stratify_colsby filling with empty strings.Uses a two-step splitting approach:

Splits data into train and combined validation-test sets.

Further splits the combined set into validation and test sets.

Attributes Used:

Handles configurations for stratification and proportional splitting.

Note

The sum of

train_size,validation_size, andtest_sizemust equal1.0.Stratification ensures the distribution of classes or categories is preserved across splits.

The function works seamlessly with both

pandas.DataFrameand array-like data structures.

Example:

X_train, X_valid, X_test, y_train, y_valid, y_test = train_val_test_split( X=features, y=target, stratify_y=target, train_size=0.6, validation_size=0.20, test_size=0.20, random_state=42, stratify_cols=['category_column'] )

get_best_score_params()

- get_best_score_params(X, y)

Retrieves the best hyperparameters for the model based on cross-validation scores for specified metrics.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix for training during hyperparameter tuning.y (

pandas.Seriesor array-like) – The target vector corresponding toX.

- Returns:

None. Updates the class attributes with the best parameters and scores.- Return type:

None- Raises:

ValueError – Raised if

self.gridorself.kfis not properly configured.KeyError – Raised if scoring metrics are missing or invalid.

Description:

This method performs hyperparameter tuning using either grid search, randomized grid search, or Bayesian search.

Identifies the best parameter set for each scoring metric specified in the class’s

scoringattribute.Updates the class attributes with the best estimator and scores.

Supported Search Methods:

Grid Search: Exhaustively searches over all parameter combinations.

Randomized Grid Search: Randomly samples a subset of parameter combinations.

Bayesian Search: Uses Bayesian optimization for hyperparameter tuning.

Behavior:

Randomized Search:

If

self.randomized_gridisTrue, uses`RandomizedSearchCV`to perform hyperparameter tuning.

Bayesian Search:

If

self.bayesianisTrue, usesBayesSearchCVfor Bayesian optimization.Removes any

bayes__prefixed parameters from the grid and uses them as additional arguments forBayesSearchCV.

Grid Search:

Defaults to

GridSearchCVif neitherrandomized_gridnorbayesianis enabled.

After fitting the model:

Updates

self.estimatorandself.test_modelwith the best estimator.Stores the best parameters and score for each scoring metric in

self.best_params_per_score.

Attributes Updated:

self.estimator: Updated with the best model after tuning.self.test_model: Updated with the same best model.self.best_params_per_score: A dictionary storing the best parameters and scores for each scoring metric.

Output:

Prints:

The best parameter set and score for each metric.

A summary of grid scores for all parameter combinations.

Updates class attributes with the tuning results.

Note

Supports custom scoring metrics via

self.custom_scorer.The method assumes

self.kfis a valid cross-validator (e.g.,KFoldorStratifiedKFold) andself.gridis properly defined.Designed to work seamlessly with classification and regression models.

conf_mat_class_kfold()

- conf_mat_class_kfold(X, y, test_model, score=None)

Generates and averages confusion matrices across k-folds, producing a combined classification report.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix for k-fold cross-validation.y (

pandas.Seriesor array-like) – The target vector corresponding toX.test_model (object) – The model to be trained and evaluated on each fold.

score (str, optional) – Optional scoring metric label for reporting purposes. Default is

None.

- Returns:

A dictionary containing the averaged classification report and confusion matrix.

- Return type:

- Raises:

ValueError – Raised if the input data is incompatible with k-fold splitting.

Description:

This method performs k-fold cross-validation to generate confusion matrices for each fold.

Averages the confusion matrices across all folds and produces a combined classification report.

Prints the averaged confusion matrix and classification report.

Behavior:

For each fold in k-fold cross-validation:

Splits the data into training and testing subsets.

Fits the

test_modelon the training subset.Predicts the target values for the testing subset.

Computes the confusion matrix for the fold and appends it to a list.

Aggregates predictions and true labels across all folds.

Averages the confusion matrices and generates an overall classification report.

Output:

Prints:

The averaged confusion matrix across all folds.

The overall classification report across all folds.

Returns:

A dictionary containing:

"Classification Report": The averaged classification report as a dictionary."Confusion Matrix": The averaged confusion matrix as a NumPy array.

Note

Designed for classification tasks evaluated with k-fold cross-validation.

Handles both

pandas.DataFrameand array-like structures forXandy.If

scoreis provided, it is included in the printed report headers.

regression_report_kfold()

- regression_report_kfold(X, y, test_model, score=None)

Generates averaged regression metrics across k-folds.

- Parameters:

X (

pandas.DataFrameor array-like) – The feature matrix for k-fold cross-validation.y (

pandas.Seriesor array-like) – The target vector corresponding toX.test_model (object) – The model to be trained and evaluated on each fold.

score (str, optional) – Optional scoring metric label for reporting purposes. Default is

None.

- Returns:

A dictionary containing averaged regression metrics across all folds.

- Return type:

- Raises:

ValueError – Raised if the input data is incompatible with k-fold splitting.

Description:

This method evaluates regression performance metrics using k-fold cross-validation.

Trains the

test_modelon training splits and evaluates it on validation splits for each fold.Aggregates regression metrics from all folds and calculates their averages.

Behavior:

For each fold in k-fold cross-validation:

Splits the data into training and testing subsets.

Fits the

test_modelon the training subset.Predicts the target values for the testing subset.

Computes regression metrics (e.g., RMSE, MAE, R²) and stores them.

Aggregates metrics across all folds and calculates their mean.

Output:

Prints:

The averaged regression metrics across all folds.

Returns:

A dictionary containing the averaged regression metrics.

Attributes Used:

self.regression_report(): Used to compute regression metrics for each fold.

Note

Designed specifically for regression tasks evaluated with k-fold cross-validation.

Handles both

pandas.DataFrameand array-like structures forXandy.

regression_report()

- regression_report(y_true, y_pred, print_results=True)

Generates a regression report with metrics like Mean Absolute Error, R-squared, and Root Mean Squared Error.

- Parameters:

y_true (array-like) – Ground truth (actual) values for the target variable.

y_pred (array-like) – Predicted values for the target variable.

print_results (bool, optional) – Whether to print the regression metrics to the console. Default is

True.

- Returns:

A dictionary containing various regression metrics.

- Return type:

- Raises:

ValueError – Raised if

y_trueandy_predhave mismatched dimensions.

Description:

Computes common regression metrics to evaluate the performance of a regression model.

Metrics include R², explained variance, mean absolute error (MAE), median absolute error, mean squared error (MSE), and root mean squared error (RMSE).

Metrics Computed:

R²: Coefficient of determination, indicating the proportion of variance in the dependent variable explained by the independent variable(s).Explained Variance: Measures the proportion of variance explained by the model.Mean Absolute Error (MAE): Average of the absolute differences between actual and predicted values.Median Absolute Error: Median of the absolute differences between actual and predicted values.Mean Squared Error (MSE): Average of the squared differences between actual and predicted values.Root Mean Squared Error (RMSE): Square root of the mean squared error.

Behavior:

Computes all metrics and stores them in a dictionary.

Optionally prints the metrics to the console, formatted for easy readability.

Output:

Prints:

A formatted list of regression metrics if

print_results=True.

Returns:

A dictionary containing the computed metrics.

Note

This method is designed for regression tasks and is not applicable to classification models.

The returned dictionary can be used for further analysis or logging.

report_model_metrics()

- report_model_metrics(model, X_valid=None, y_valid=None, threshold=0.5, print_results=True, print_per_fold=False)

Generate a DataFrame of model performance metrics, adapting to regression, binary classification, or multiclass classification problems.

Key Features:

Handles regression, binary classification, and multiclass classification tasks.

Supports K-Fold cross-validation with optional metrics printing for individual folds.

Adapts metrics calculation based on the model’s

model_typeattribute.

- Parameters:

model (object) – The trained model with the necessary attributes and methods for prediction, including

predict_probaorpredict, and attributes likemodel_typeandmulti_label(for multiclass classification).X_valid (pandas.DataFrame or array-like, optional) – Feature set used for validation. If performing K-Fold validation, this represents the entire dataset. Default is

None.y_valid (pandas.Series or array-like, optional) –

Truelabels for the validation dataset. If performing K-Fold validation, this corresponds to the entire dataset. Default isNone.threshold (float, optional) – Classification threshold for binary classification models. Predictions above this threshold are classified as the positive class. Default is

0.5.print_results (bool, optional) – Whether to print the metrics report. Default is

True.print_per_fold (bool, optional) – If performing K-Fold validation, specifies whether to print metrics for each fold. Default is

False.

- Return type:

pandas.DataFrame

- Raises:

ValueError – Raised if the provided

model_typeis invalid or incompatible with the data.AttributeError – Raised if the required attributes or methods are missing from the model.

TypeError – Raised for incorrect parameter types, such as non-numeric thresholds.

- Returns:

A pandas DataFrame containing calculated performance metrics. The structure of the DataFrame depends on the model type:

Regression: Includes Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), R² Score, and Explained Variance.

Binary Classification: Includes Precision (PPV), Average Precision, Sensitivity, Specificity, AUC-ROC, and Brier Score.

Multiclass Classification: Includes Precision, Recall, and F1-Score for each class, along with weighted averages and accuracy.

Note

For regression models, standard regression metrics are calculated.

For binary classification models, threshold-based metrics are computed using probabilities from

predict_proba.For multiclass classification models, metrics are calculated for each class, along with weighted averages.

K-Fold cross-validation aggregates metrics across folds, with an option to print results for each fold.

Examples:

## Example for binary classification: metrics_df = report_model_metrics(model, X_valid=X_test, y_valid=y_test, threshold=0.5) ## Example for regression: metrics_df = report_model_metrics(model, X_valid=X_test, y_valid=y_test) ## Example for K-Fold validation: metrics_df = report_model_metrics(model, X_valid=X, y_valid=y, print_per_fold=True)

find_optimal_threshold_beta()

- find_optimal_threshold_beta(y, y_proba, target_metric=None, target_score=None, beta_value_range=np.linspace(0.01, 4, 400))

Determine the optimal threshold and beta value for classification models by iteratively tuning the decision boundary to meet a target precision or recall score.

Key Features:

Iteratively finds an optimal threshold using an expanding delta range if an exact match isn’t found.

Supports tuning based on precision or recall.

Uses a progressive delta expansion strategy to allow some flexibility in finding an optimal threshold.

Terminates with a warning if delta exceeds 0.2.

- Parameters:

y (numpy.ndarray or list) – The true labels of the dataset, expected to be binary (0 or 1).

y_proba (numpy.ndarray or list) – The predicted probabilities outputted by a model for the positive class.

target_metric (str, optional) – The performance metric to optimize for. Supports either

"precision"or"recall".target_score (float, optional) – The desired precision or recall score that the function attempts to achieve.

beta_value_range (numpy.ndarray, optional) – The range of beta values to test, controlling the balance between precision and recall. Defaults to an array of 400 values between

0.01and4.- Raises:

ValueError – If

target_metricis not one of"precision"or"recall".Exception – If delta exceeds 0.2, meaning an optimal threshold could not be found within tolerance.

- Return type:

tuple or None

- Returns:

A tuple containing:

threshold (float): The optimal decision threshold for classification.

beta (float): The beta value at which the optimal threshold was found.

Returns

Noneif no suitable threshold is found within the acceptable delta range.Note

Uses the

threshold_tunefunction internally to determine the best threshold for each beta.If no exact match is found initially, the function gradually increases

deltain increments of0.01.If delta exceeds

0.2, the function raises an exception indicating failure to find an optimal threshold.Examples:

## Example usage for optimizing precision threshold, beta = find_optimal_threshold_beta( y=y_test, y_proba=y_probabilities, target_metric="precision", target_score=0.8 ) ## Example usage for optimizing recall threshold, beta = find_optimal_threshold_beta( y=y_test, y_proba=y_probabilities, target_metric="recall", target_score=0.85 ) ## Example handling a case where no exact match is found try: threshold, beta = find_optimal_threshold_beta( y=y_test, y_proba=y_probabilities, target_metric="precision", target_score=0.95 ) except Exception as e: print(f"Could not find an optimal threshold: {e}")

Helper Functions

kfold_split()

- kfold_split(classifier, X, y, stratify=False, scoring=['roc_auc'], n_splits=10, random_state=3)

Splits data using k-fold or stratified k-fold cross-validation.

- Parameters:

classifier (object) – The classifier or model to be evaluated during cross-validation.

X (pandas.DataFrame or array-like) – Features dataset to split into k-folds.

y (pandas.Series or array-like) – Target dataset corresponding to

X.stratify (bool, optional) – Whether to use stratified k-fold cross-validation. If

True, usesStratifiedKFold. Otherwise, usesKFold. Default isFalse.scoring (list of str, optional) – Scoring metric(s) to evaluate during cross-validation. Default is

["roc_auc"].n_splits (int, optional) – Number of splits/folds to create for cross-validation. Default is

10.random_state (int, optional) – Random seed for reproducibility. Default is

3.

- Returns:

A

KFoldorStratifiedKFoldcross-validator object based on thestratifyparameter.- Return type:

sklearn.model_selection.KFoldorsklearn.model_selection.StratifiedKFold- Raises:

ValueError – Raised if invalid parameters (e.g., negative

n_splits) are provided.

Note

Use

stratify=Truefor datasets where maintaining the proportion of classes in each fold is important.Use

stratify=Falsefor general k-fold splitting.

get_cross_validate()

- get_cross_validate(classifier, X, y, kf, scoring=['roc_auc'])

Performs cross-validation using the provided classifier, dataset, and cross-validation strategy.

- Parameters:

classifier (object) – The classifier or model to be evaluated during cross-validation.

X (

pandas.DataFrameor array-like) – Features dataset to use during cross-validation.y (

pandas.Seriesor array-like) – Target dataset corresponding toX.kf (

sklearn.model_selection.KFoldorsklearn.model_selection.StratifiedKFold) – Cross-validator object, such asKFoldorStratifiedKFold, specifying the cross-validation strategy.scoring (list of str, optional) – Scoring metric(s) to evaluate during cross-validation. Default is

["roc_auc"].

- Returns:

A dictionary containing cross-validation results, including train and test scores for each fold.

Returned Dictionary Keys:

test_score: Test scores for each fold.train_score: Training scores for each fold.estimator: The estimator fitted on each fold.fit_time: Time taken to fit the model on each fold.score_time: Time taken to score the model on each fold.

- Return type:

- Raises:

ValueError – Raised if invalid

kforscoringparameters are provided.

Note

Supports multiple scoring metrics, which can be specified as a list (e.g.,

["accuracy", "roc_auc"]).Returns additional information such as train scores and estimators for further analysis.

Ensure the classifier supports the metrics defined in the

scoringparameter.

_confusion_matrix_print()

- _confusion_matrix_print(conf_matrix, labels)

Prints the formatted confusion matrix for binary classification.

- Parameters:

conf_matrix (numpy.ndarray or array-like) – The confusion matrix to print, typically a 2x2 numpy array or similar structure.

labels (list of str) – A list of labels corresponding to the confusion matrix entries in the order

[TN, FP, FN, TP].

Description:

Formats and prints a binary classification confusion matrix with labeled cells for True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN).

Includes additional formatting to enhance readability, such as aligned columns and labeled rows.

Output:

The function prints a structured table representation of the confusion matrix directly to the console.

print_pipeline()

- print_pipeline(pipeline)

Displays an ASCII representation of the pipeline steps for visual clarity.

- Parameters:

pipeline (

sklearn.pipeline.Pipelineor object with astepsattribute) – The pipeline object containing different steps to display. Typically, asklearn.pipeline.Pipelineobject or similar structure.

Description:

This function iterates over the steps in a pipeline and displays each step in a visually formatted ASCII art representation.

For each pipeline step:

Displays the step name and its class name in a boxed format.

Connects steps with vertical connectors (│) and arrows (▼) for clarity.

Dynamically adjusts box width based on the longest step name or class name to maintain alignment.

Output:

The function prints the pipeline structure directly to the console, providing an easy-to-read ASCII visualization.

Note

If the pipeline has no steps or lacks a

stepsattribute, the function prints a message:"No steps found in the pipeline!".Designed for readability, especially in terminal environments.

Pipeline Management

The pipeline in the model tuner class is designed to automatically organize steps into three categories: preprocessing, feature selection, and imbalanced sampling. The steps are ordered in the following sequence:

Preprocessing:

Imputation

Scaling

Other preprocessing steps

Imbalanced Sampling

Feature Selection

Classifier

The pipeline_assembly method automatically sorts the steps into this order.

Specifying Pipeline Steps

Pipeline steps can be specified in multiple ways. For example, if naming a pipeline step then specify like so:

pipeline_steps = ['imputer', SimpleImputer()]

Naming each step is optional and the steps can also be specified like so:

pipeline_steps = [SimpleImputer(), StandardScalar(), rfe()]

If no name is assigned, the step will be renamed automatically to follow the convention

step_0,step_1, etc.Column transformers can also be included in the pipeline and are automatically categorized under the preprocessing section.

Helper Methods for Pipeline Extraction

To support advanced use cases, the model tuner provides helper methods to extract parts of the pipeline for later use. For example, when generating SHAP plots, users might only need the preprocessing section of the pipeline.

Here are some of the available methods:

- get_preprocessing_and_feature_selection_pipeline()

Extracts both the preprocessing and feature selection parts of the pipeline.

Definition:

def get_preprocessing_and_feature_selection_pipeline(self): steps = [ (name, transformer) for name, transformer in self.estimator.steps if name.startswith("preprocess_") or name.startswith("feature_selection_") ] return self.PipelineClass(steps)

- get_feature_selection_pipeline()

Extracts only the feature selection part of the pipeline.

Definition:

def get_feature_selection_pipeline(self): steps = [ (name, transformer) for name, transformer in self.estimator.steps if name.startswith("feature_selection_") ] return self.PipelineClass(steps)

- get_preprocessing_pipeline()

Extracts only the preprocessing part of the pipeline.

Definition:

def get_preprocessing_pipeline(self): preprocessing_steps = [ (name, transformer) for name, transformer in self.estimator.steps if name.startswith("preprocess_") ] return self.PipelineClass(preprocessing_steps)

Extracting Feature names

When performing feature selection with tools such as Recursive Feature Elimination (RFE) or when using ColumnTransformers the feature names that are fed to the model can be obscured and different from the original. To get the transformed feature names or to extract the feature names that were selected by the feature selection process we have provided the get_feature_names() method.

- get_feature_names()

Extracts the feature names after they have been processed by the pipeline. This does not work if a ColumnTransformerm, OneHotEncoder or some form of feature selection is not present in the pipeline.

Definition:

def get_feature_names(self): if self.pipeline_steps is None or not self.pipeline_steps: raise ValueError("You must provide pipeline steps to use get_feature_names") if hasattr(self.estimator, "steps"): estimator_steps = self.estimator[:-1] else: estimator_steps = self.estimator.estimator[:-1] return estimator_steps.get_feature_names_out().tolist()

Example Usage:

### Assuming you already have fitted a model with some form of feature selection ### or feature transformation in the pipeline e.g. one hot encoder: feat_names = model.get_feature_names()

Summary

By organizing pipeline steps automatically and providing helper methods for extraction, the model tuner class offers flexibility and ease of use for building and managing complex pipelines. Users can focus on specifying the steps, and the tuner handles naming, sorting, and category assignments seamlessly.

Binary Classification

Binary classification is a type of supervised learning where a model is trained

to distinguish between two distinct classes or categories. In essence, the model

learns to classify input data into one of two possible outcomes, typically

labeled as 0 and 1, or negative and positive. This is commonly used in

scenarios such as spam detection, disease diagnosis, or fraud detection.

The model_tuner library handles binary classification seamlessly through the Model

class. Users can specify a binary classifier as the estimator, and the library

takes care of essential tasks like data preprocessing, model calibration, and

cross-validation. The library also provides robust support for evaluating the

model’s performance using a variety of metrics, such as accuracy, precision,

recall, and ROC-AUC, ensuring that the model’s ability to distinguish between the

two classes is thoroughly assessed. Additionally, the library supports advanced

techniques like imbalanced data handling and model calibration to fine-tune

decision thresholds, making it easier to deploy effective binary classifiers in

real-world applications.

AIDS Clinical Trials Group Study

The UCI Machine Learning Repository is a well-known resource for accessing a wide range of datasets used for machine learning research and practice. One such dataset is the AIDS Clinical Trials Group Study dataset, which can be used to build and evaluate predictive models.

You can easily fetch this dataset using the ucimlrepo package. If you haven’t installed it yet, you can do so by running the following command:

pip install ucimlrepo

Once installed, you can quickly load the AIDS Clinical Trials Group Study dataset with a simple command:

from ucimlrepo import fetch_ucirepo

Step 1: Import Necessary libraries

import pandas as pd

import numpy as np

from ucimlrepo import fetch_ucirepo

from xgboost import XGBClassifier

from model_tuner import Model

Step 2: Load the dataset, define X, y

## Fetch dataset

aids_clinical_trials_group_study_175 = fetch_ucirepo(id=890)

## Data (as pandas dataframes)

X = aids_clinical_trials_group_study_175.data.features

y = aids_clinical_trials_group_study_175.data.targets

y = y.squeeze() ## convert a DataFrame to Series when single column

Step 3: Check for zero-variance columns and drop accordingly

## Check for zero-variance columns and drop them

zero_variance_columns = X.columns[X.var() == 0]

if not zero_variance_columns.empty:

X = X.drop(columns=zero_variance_columns)

Step 4: Create an instance of the XGBClassifier

## Creating an instance of the XGBClassifier

xgb_name = "xgb"

xgb = XGBClassifier(

objective="binary:logistic",

random_state=222,

)

Step 5: Define Hyperparameters for XGBoost

In binary classification, we configure the XGBClassifier for tasks where the

model predicts between two classes (e.g., positive/negative or 0/1). Here, we

define a grid of hyperparameters to fine-tune the XGBoost model.

The following code defines the hyperparameter grid and configuration:

xgbearly = True

tuned_parameters_xgb = {

f"{xgb_name}__max_depth": [3, 10, 20, 200, 500],

f"{xgb_name}__learning_rate": [1e-4],

f"{xgb_name}__n_estimators": [1000],

f"{xgb_name}__early_stopping_rounds": [100],

f"{xgb_name}__verbose": [0],

f"{xgb_name}__eval_metric": ["logloss"],

}

## Define model configuration

xgb_definition = {

"clc": xgb,

"estimator_name": xgb_name,

"tuned_parameters": tuned_parameters_xgb,

"randomized_grid": False,

"n_iter": 5, ## Number of iterations if randomized_grid=True

"early": xgbearly,

}

Key Configurations

Hyperparameter Grid:

max_depth: Limits the depth of each decision tree to prevent overfitting.learning_rate: Controls the impact of each boosting iteration; smaller values require more boosting rounds.n_estimators: Specifies the total number of boosting rounds.verbose: Controls output during training; set to0for silent mode or1to display progress.eval_metric: Measures model performance (e.g.,loglossfor binary classification), evaluating the negative log-likelihood.early_stopping_rounds: Halts training early if validation performance does not improve after the specified number of rounds.

General Settings:

Use

randomized_grid=Falseto perform exhaustive grid search.Set the number of iterations for randomized search with

n_iterif needed.

The grid search will explore the parameter combinations to find the optimal configuration for binary classification tasks.

Note

The verbose parameter in XGBoost allows you to control the level of output during training:

Set to

0orFalse: Suppresses all training output (silent mode).Set to

1orTrue: Displays progress and evaluation metrics during training.

This can be particularly useful for monitoring model performance when early stopping is enabled.

Important

When defining hyperparameters for boosting algorithms, frameworks like

XGBoost allow straightforward configuration, such as specifying n_estimators

to control the number of boosting rounds. However, CatBoost introduces certain

pitfalls when this parameter is defined.

Refer to the important caveat regarding this scenario for further details.

Step 6: Initialize and configure the Model

XGBClassifier inherently handles missing values (NaN) without requiring explicit

imputation strategies. During training, XGBoost treats missing values as a

separate category and learns how to route them within its decision trees.

Therefore, passing a SimpleImputer or using an imputation strategy is unnecessary

when using XGBClassifier.

model_type = "xgb"

clc = xgb_definition["clc"]

estimator_name = xgb_definition["estimator_name"]

tuned_parameters = xgb_definition["tuned_parameters"]

n_iter = xgb_definition["n_iter"]

rand_grid = xgb_definition["randomized_grid"]

early_stop = xgb_definition["early"]

kfold = False

calibrate = True

## Initialize model_tuner

model_xgb = Model(

name=f"AIDS_Clinical_{model_type}",

estimator_name=estimator_name,

calibrate=calibrate,

estimator=clc,

model_type="classification",

kfold=kfold,

stratify_y=True,

stratify_cols=["gender", "race"],

grid=tuned_parameters,

randomized_grid=rand_grid,

boost_early=early_stop,

scoring=["roc_auc"],

random_state=222,

n_jobs=2,

)

Step 7: Perform grid search parameter tuning and retrieve split data

## Perform grid search parameter tuning

model_xgb.grid_search_param_tuning(X, y, f1_beta_tune=True)

## Get the training, validation, and test data

X_train, y_train = model_xgb.get_train_data(X, y)

X_valid, y_valid = model_xgb.get_valid_data(X, y)

X_test, y_test = model_xgb.get_test_data(X, y)

With the model configured, the next step is to perform grid search parameter tuning

to find the optimal hyperparameters for the XGBClassifier. The

grid_search_param_tuning method will iterate over all combinations of

hyperparameters specified in tuned_parameters, evaluate each one using the

specified scoring metric, and select the best performing set.

In this example, we pass an additional argument, f1_beta_tune=True, which

adjusts the F1 score to weigh precision and recall differently during

hyperparameter optimization.

Note

For a more in depth discussion on threshold tuning, refer to this section.

Why use f1_beta_tune=True?

Standard F1-Score: Balances precision and recall equally (

beta=1).Custom Beta Values:

With

f1_beta_tune=True, the model tunes the decision threshold to optimize a custom F1 score using the beta value specified internally.This is useful in scenarios where one metric (precision or recall) is more critical than the other.

This method will:

Split the Data: The data will be split into training and validation sets. Since

stratify_y=True, the class distribution will be maintained across splits.After tuning, retrieve the training, validation, and test splits using:

get_train_datafor training data.get_valid_datafor validation data.get_test_datafor test data.

Iterate Over Hyperparameters: All combinations of hyperparameters defined in

tuned_parameterswill be tried sincerandomized_grid=False.Early Stopping: With

boost_early=Trueandearly_stopping_roundsset in the hyperparameters, the model will stop training early if the validation score does not improve.Optimize for Scoring Metric: The model uses

roc_auc(ROC AUC) as the scoring metric suitable for binary classification.Select Best Model: The hyperparameter set that yields the best validation score will be selected.

Pipeline Steps:

┌─────────────────┐

│ Step 1: xgb │

│ XGBClassifier │

└─────────────────┘

100%|██████████| 5/5 [00:47<00:00, 9.43s/it]

Fitting model with best params and tuning for best threshold ...

100%|██████████| 2/2 [00:00<00:00, 2.87it/s]Best score/param set found on validation set:

{'params': {'xgb__early_stopping_rounds': 100,

'xgb__eval_metric': 'logloss',

'xgb__learning_rate': 0.0001,

'xgb__max_depth': 3,

'xgb__n_estimators': 999},

'score': 0.9260891500474834}

Best roc_auc: 0.926

Step 8: Fit the model

In this step, we train the XGBClassifier using the training data and monitor

performance on the validation data during training.

model_xgb.fit(

X_train,

y_train,

validation_data=[X_valid, y_valid],

)

Note

The inclusion of validation_data allows XGBoost to:

Monitor Validation Performance: XGBoost evaluates the model’s performance on the validation set after each boosting round using the specified evaluation metric (e.g.,

logloss).Enable Early Stopping: If

early_stopping_roundsis defined, training will stop automatically if the validation performance does not improve after a set number of rounds, preventing overfitting and saving computation time.

Step 9: Return metrics (optional)

Hint

Use the return metrics function to evaluate the model by printing the output.

# ------------------------- VALID AND TEST METRICS -----------------------------

print("Validation Metrics")

model_xgb.return_metrics(

X=X_valid,

y=y_valid,

optimal_threshold=True,

print_threshold=True,

model_metrics=True,

)

print()

print("Test Metrics")

model_xgb.return_metrics(

X=X_test,

y=y_test,

optimal_threshold=True,

print_threshold=True,

model_metrics=True,

)

print()

Validation Metrics

Confusion matrix on set provided:

--------------------------------------------------------------------------------

Predicted:

Pos Neg

--------------------------------------------------------------------------------

Actual: Pos 93 (tp) 11 (fn)

Neg 76 (fp) 248 (tn)

--------------------------------------------------------------------------------

********************************************************************************

Report Model Metrics: xgb

Metric Value

0 Precision/PPV 0.550296

1 Average Precision 0.802568

2 Sensitivity 0.894231

3 Specificity 0.765432

4 AUC ROC 0.926089

5 Brier Score 0.166657

********************************************************************************

--------------------------------------------------------------------------------

precision recall f1-score support

0 0.96 0.77 0.85 324

1 0.55 0.89 0.68 104

accuracy 0.80 428

macro avg 0.75 0.83 0.77 428

weighted avg 0.86 0.80 0.81 428

--------------------------------------------------------------------------------

Optimal threshold used: 0.26

Test Metrics

Confusion matrix on set provided:

--------------------------------------------------------------------------------

Predicted:

Pos Neg

--------------------------------------------------------------------------------

Actual: Pos 99 (tp) 6 (fn)

Neg 82 (fp) 241 (tn)

--------------------------------------------------------------------------------

********************************************************************************

Report Model Metrics: xgb

Metric Value

0 Precision/PPV 0.546961

1 Average Precision 0.816902

2 Sensitivity 0.942857

3 Specificity 0.746130

4 AUC ROC 0.934306

5 Brier Score 0.167377

********************************************************************************

--------------------------------------------------------------------------------

precision recall f1-score support

0 0.98 0.75 0.85 323

1 0.55 0.94 0.69 105

accuracy 0.79 428

macro avg 0.76 0.84 0.77 428

weighted avg 0.87 0.79 0.81 428

--------------------------------------------------------------------------------

Optimal threshold used: 0.26

Note

A detailed classification report is also available at this stage for review. To print and examine it, refer to this Model Metrics section for guidance on accessing and interpreting the report.

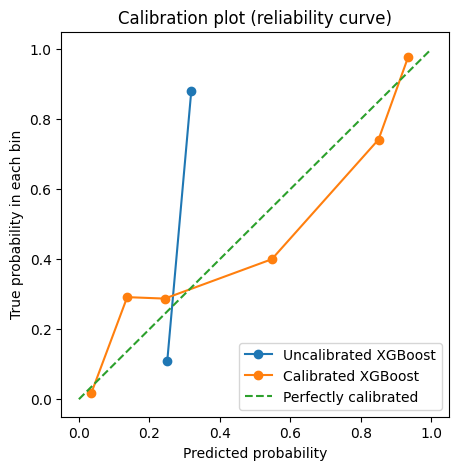

Step 10: Calibrate the model (if needed)

See this section for more information on model calibration.

import matplotlib.pyplot as plt

from sklearn.calibration import calibration_curve

## Get the predicted probabilities for the validation data from uncalibrated model

y_prob_uncalibrated = model_xgb.predict_proba(X_test)[:, 1]

## Compute the calibration curve for the uncalibrated model

prob_true_uncalibrated, prob_pred_uncalibrated = calibration_curve(

y_test,

y_prob_uncalibrated,

n_bins=10,

)

## Calibrate the model

if model_xgb.calibrate:

model_xgb.calibrateModel(X, y, score="roc_auc")

## Predict on the validation set

y_test_pred = model_xgb.predict_proba(X_test)[:, 1]

Change back to CPU

Confusion matrix on validation set for roc_auc

--------------------------------------------------------------------------------

Predicted:

Pos Neg

--------------------------------------------------------------------------------

Actual: Pos 74 (tp) 30 (fn)

Neg 20 (fp) 304 (tn)

--------------------------------------------------------------------------------

precision recall f1-score support

0 0.91 0.94 0.92 324

1 0.79 0.71 0.75 104

accuracy 0.88 428

macro avg 0.85 0.82 0.84 428

weighted avg 0.88 0.88 0.88 428

--------------------------------------------------------------------------------

roc_auc after calibration: 0.9260891500474834

## Get the predicted probabilities for the validation data from calibrated model

y_prob_calibrated = model_xgb.predict_proba(X_test)[:, 1]

## Compute the calibration curve for the calibrated model

prob_true_calibrated, prob_pred_calibrated = calibration_curve(

y_test,

y_prob_calibrated,

n_bins=10,

)

## Plot the calibration curves

plt.figure(figsize=(5, 5))

plt.plot(

prob_pred_uncalibrated,

prob_true_uncalibrated,

marker="o",

label="Uncalibrated XGBoost",

)

plt.plot(

prob_pred_calibrated,

prob_true_calibrated,

marker="o",

label="Calibrated XGBoost",

)

plt.plot(

[0, 1],

[0, 1],

linestyle="--",

label="Perfectly calibrated",

)

plt.xlabel("Predicted probability")

plt.ylabel("True probability in each bin")

plt.title("Calibration plot (reliability curve)")

plt.legend()

plt.show()

F1 Beta Threshold Tuning

In binary classification, selecting an optimal classification threshold is crucial for achieving the best balance between precision and recall. The default threshold of 0.5 may not always yield optimal performance, especially when dealing with imbalanced datasets. F1 Beta threshold tuning helps adjust this threshold to maximize the F-beta score, which balances precision and recall according to the importance assigned to each through the beta parameter.

Note

To better understand the impact of threshold tuning on model results, see the Threshold Tuning Considerations section.

Understanding F1 Beta Score

The F-beta score is a generalization of the F1-score that allows you to weigh recall more heavily than precision (or vice versa) based on the beta parameter:

F1-Score (beta = 1): Equal importance to precision and recall.

F-beta > 1: More emphasis on recall. Useful when false negatives are more critical (e.g., disease detection).

F-beta < 1: More emphasis on precision. Suitable when false positives are costlier (e.g., spam detection).

Example usage: default (beta = 1)

Setting up the Model object ready for tuning.

from xgboost import XGBClassifier

xgb_name = "xgb"

xgb = XGBClassifier(

objective="binary:logistic"

random_state=222,

)

tuned_parameters_xgb = {

f"{xgb_name}__max_depth": [3, 10, 20, 200, 500],

f"{xgb_name}__learning_rate": [1e-4],

f"{xgb_name}__n_estimators": [1000],

f"{xgb_name}__early_stopping_rounds": [100],

f"{xgb_name}__verbose": [0],

f"{xgb_name}__eval_metric": ["logloss"],

}

xgb_model = Model(

name=f"Threshold Example Model",

estimator_name=xgb_name,

calibrate=False,

model_type="classification",

estimator=xgb,

kfold=False,

stratify_y=True,

stratify_cols=False,

grid=tuned_parameters_xgb,

randomized_grid=False,

boost_early=False,

scoring=["roc_auc"],

random_state=222,

n_jobs=2,

)

In the grid_search_param_tuning use the f1_beta_tune variable when using

grid_search_param_tuning(). Set this to True to enable tuning.

xgb_model.grid_search_param_tuning(X, y, f1_beta_tune=True)

This will find the best hyperparameters and then find the best threshold for these balancing both precision and recall. The threshold is stored in the Model object. To access this:

xgb_model.threshold

This will give the best threshold found for each score specified in the Model object.

When using methods to return metrics or report metrics and an optimal threshold was used make sure to remember to specify this in them for example:

xgb_model.return_metrics(

X_valid,

y_valid,

optimal_threshold=True,

print_threshold=True,

model_metrics=True,

)

Example usage: custom betas (higher recall)

If we want to have a higher recall score and care less about precision then we increase

the beta value. This looks very similar to the previous example except that when we

use f1_beta_tune, we also set a beta value like so:

xgb_model.grid_search_param_tuning(X, y, f1_beta_tune=True, betas=[2])

Setting the beta value to 2 will priortise increasing the recall over the precision.

Example usage: custom betas (higher precision)

If we want to have a higher precision score and care less about recall then we decrease the beta value. This looks very similar to the previous example except that we set the beta value to less than 1.

xgb_model.grid_search_param_tuning(X, y, f1_beta_tune=True, betas=[0.5])

Setting the beta value to 0.5 will priortise increasing the precision over the recall.

Optimizing Model Threshold for Precision/Recall Trade-off

This function helps fine-tune a saved model’s decision threshold to maximize precision or recall using a beta-weighted approach.

Note

See this section for a more detailed explanation with contextual examples.

threshold, beta = find_optimal_threshold_beta(

y_valid,

model.predict_proba(X_valid)[:, 1],

target_metric="precision",

target_score=0.5,

beta_value_range=np.linspace(0.01, 4, 40),

)

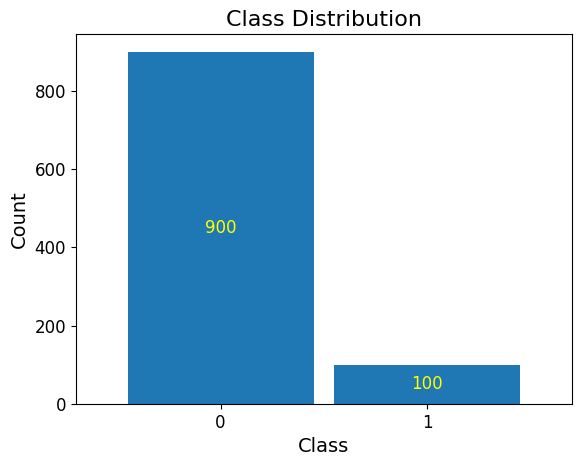

Imbalanced Learning

In machine learning, imbalanced datasets are a frequent challenge, especially in real-world scenarios. These datasets have an unequal distribution of target classes, with one class (e.g., fraudulent transactions, rare diseases, or other low-frequency events) being underrepresented compared to the majority class. Models trained on imbalanced data often struggle to generalize, as they tend to favor the majority class, leading to poor performance on the minority class.

To mitigate these issues, it is crucial to:

Understand the nature of the imbalance in the dataset.

Apply appropriate resampling techniques (oversampling, undersampling, or hybrid methods).

Use metrics beyond accuracy, such as precision, recall, and F1-score, to evaluate model performance fairly.

Generating an imbalanced dataset

Demonstrated below are the steps to generate an imbalanced dataset using

make_classification from the sklearn.datasets module. The following

parameters are specified: